Intro

There are still a lot of misunderstandings about Oracle sequences. Sometimes even experts say things about sequences that are easy to misunderstand, especially once we look into the details. This post gives a detailed overview of what sequences are, why they work as they do, and how we should use them.

There are also a lot of parameters that the sequence object has and that you can use to tweak the behaviour. I will cover the most common things here.

Wording

Many of the misunderstandings come from how we use the word “sequence”. It can mean several slightly different things.

meaning a) The sequence object in the database aka the number generator

meaning b) the number value that is retrieved via mySeq.nextval

meaning c) an attribute for a list of numbers, stored typically in an ID column

“This list is in sequence” often means that we have an ordered list of numbers without gaps (in math terms: consecutive, monotonically increasing integer values).

For the remainder of the document I will try to always make clear which meaning I am referring to. The relevant words will be written in italics to hint at the specific interpretation in that sentence. In cases where I say “sequence” without additional specification details, I will mean the sequence object.

Purpose

The most common sequence usage is as technical values for ID columns. A typical ID column is a surrogate key. As opposed to a natural key, a surrogate key has no intrinsic meaning. Its only use is to identify (=ID) a database record in a table. No intrinsic meaning also implies that we cannot make business decisions that depend on this ID value.

For example the following sentence should be considered a wrong deduction.

“Employee ID=17 was hired before Employee ID=26 because he/she has a lower ID”.

If we want to make qualified statements, then we must add the needed information to the data, for example a column “hire_date”. Then we can use it to deduce when an employee was hired and what the order among different employees is.

The main advantage of a surrogate (meaningless technical) key is that the database can use it to ensure referential integrity. And this integrity rule is ensured even if something changes with regard to the business key. Typically business keys do not change. But if it happens, then the relationship is still ensured by the foreign key pointing to the surrogate key. For example we might have an INVOICE table. The business key might be the invoice number. In general this number is immutable, however there could have been a typo or scanner fault while the invoice was registered in the system. With a surrogate key it is possible to change this invoice number without having to change all dependent records (like invoice positions) as well.

One of the best ways to supply values for such a surrogate key column (ID) is to use a sequence object and call the NEXTVAL function (pseudocolumn) on it. We can do that with a database trigger, as an identity column or directly in an insert statement.

Usage

standard usage

The standard usage of a sequence simply is to provide values for an ID column in the most performant way.

If you are new to the concept of Oracle sequences, then I suggest going to livesql.com and trying out the next few examples there yourself. Experienced developers might want to skip those basic examples.

A) sequence + nextval on insert

First create a sequence using all default settings. We then use this sequence to provide ID values for our super-employees.

create table super_emp

(id number primary key,

first_name varchar2(100),

last_name varchar2(100),

hire_date date);

create sequence emp_seq;

Then call nextval directly in an insert statement

insert into super_emp (id, first_name, last_name, hire_date)

values (emp_seq.nextval, 'Peter', 'Parker', trunc(sysdate));

insert into super_emp (id, first_name, last_name, hire_date)

values (emp_seq.nextval, 'Clark', 'Kent', trunc(sysdate));

1 row inserted.

1 row inserted.

The NEXTVAL pseudocolumn was used directly in the values section of the insert statement.

B) before row insert table trigger

Create a table trigger that fires during insert (pre 12c solution)

create or replace trigger trg_emp_bri

before insert on super_emp

for each row

begin

if inserting then

if :NEW.ID is null then

:NEW.ID := emp_seq.nextval;

end if;

end if;

end;

/

Oracle SQL Developer has a very nice wizard that helps to quickly create such a trigger. The table context menu (right-click) has an entry to create a PK trigger based on a sequence. It creates a trigger very similar to the one above (I removed a select from dual in favour of a direct assignment).

Then insert into the table using either a NULL value or without the ID column.

insert into super_emp (id, first_name, last_name, hire_date)

values (null, 'Tony', 'Stark', trunc(sysdate));

insert into super_emp (first_name, last_name, hire_date)

values ('Bruce', 'Wayne', trunc(sysdate));

1 row inserted.

1 row inserted.

This is very nice. The application code that does the insert does not need to bother with the name of the sequence.

The trigger fires once FOR EACH ROW that is inserted. The code executes slightly BEFORE the row data is inserted. Before row triggers are typically used to set default values for columns or to do some more complicated checks. After row triggers also exist. They are usually used for monitoring purposes, like writing data into an audit trail.

C) Use the sequence in the column definition (since 12c)

Since 12c we have two new options. Create a column AS an IDENTITY column or set the default value for the column to sequence.NEXTVAL. Both options can be configured to work only ON NULL. In case of an identity column, Oracle will automatically create a sequence. More about this in the chapter “identity columns”. Here is an example using the default setting.

The table trigger from B) is not needed anymore, so we can drop it.

alter table super_emp modify id default on null emp_seq.nextval;

drop trigger trg_emp_bri;

Then run the inserts.

insert into super_emp (id, first_name, last_name, hire_date)

values (null, 'Diana', 'Prince', trunc(sysdate));

insert into super_emp (first_name, last_name, hire_date)

values ('Steve', 'Rogers', trunc(sysdate));

1 row inserted.

1 row inserted.

Before 12c it was not possible to use the sequence pseudocolumns (NEXTVAL and CURRVAL) as a default value for a column. With 12c this is possible now. The result is the same as with a before row trigger, but usually it is noticeably faster when we insert multiple rows.

Check the results

select id, first_name, last_name from super_emp;

| ID | FIRST_NAME | LAST_NAME |
|----|------------|-----------|
| 1 | Peter | Parker |
| 2 | Clark | Kent |
| 3 | Tony | Stark |
| 4 | Bruce | Wayne |
| 5 | Diana | Prince |
| 6 | Steve | Rogers |

All inserts were done successfully. All three methods work.

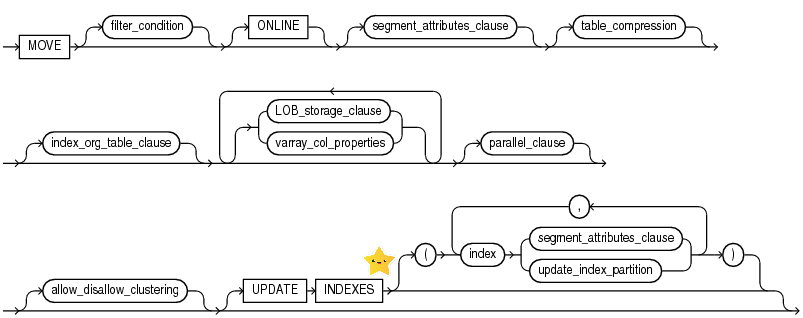

18c create sequence parameters

syntax diagram

Some basic stuff first

Here we go through the different parameters. Behind some of them are very complex concepts. If so, those concepts are explained in a later section. This basic section covers how to set each parameter and the immediate effects of setting or not setting it.

INCREMENT BY vs. START WITH

START WITH says what the very first value will be. It can be negative.

INCREMENT BY says how the next value will be calculated. It can also be negative but not 0.

The syntax diagram is slightly misleading. It gives the impression that we can specify only one of them during creation: either INCREMENT BY or START WITH, but not both. This is not true, we can create a sequence and specify both. The default for both is 1.

create sequence testseq increment by 10 start with 2;

select testseq.nextval from dual connect by level <= 3;

NEXTVAL

2

12

22

Other parameters like CYCLE and NOCYCLE can not be specified both at the same time. The syntax diagram is correct for those.

For the remainder of this document, we assume the increment is always 1 (unless clearly mentioned otherwise).

Note that we can not alter the START WITH value, but we can alter the INCREMENT BY.

Hint: The undocumented RESTART clause allows us to set a new START WITH value. See the section “How to reset a sequence”.
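As a small illustration of the note above, here is a minimal sketch of changing the increment on an existing sequence. It assumes the testseq sequence from the previous demo still exists. The commented-out statement only shows what is not allowed, it is not meant to be run.

```sql
-- testseq was created with "increment by 10 start with 2"
alter sequence testseq increment by 5;

-- from now on the values advance in steps of 5 instead of 10
select testseq.nextval from dual;

-- a plain ALTER with START WITH is rejected
-- (ORA-02283: cannot alter starting sequence number)
-- alter sequence testseq start with 100;
```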

MAXVALUE and MINVALUE

Typically we don’t have the need to set those two parameters, the defaults are good.

Facts

CYCLE vs. NOCYCLE (default)

CYCLE specifies that the sequence, after it has reached the MAXVALUE, will start again with the MINVALUE (not with the START WITH value). The theoretical maxvalue of a sequence is 28 digits. It is a bit less with scalable sequences.

Nowadays there is no compelling reason to use CYCLE.

I believe in the old days (1990 – Oracle 7) disc space was still a premium commodity. Therefore number columns were often limited to a low number of digits (5 or 6). Under certain specific circumstances a cycling sequence then might have been useful to prevent numeric or value errors. Those days are gone.
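To see the wrap-around to MINVALUE (and not to START WITH) in action, here is a minimal sketch. The sequence name cycle_demo_seq and its tiny value range are made up for this illustration.

```sql
-- hypothetical demo sequence with only three possible values
-- NOCACHE is used because the cache must be smaller than one cycle
create sequence cycle_demo_seq
  minvalue 1
  maxvalue 3
  start with 2
  cycle
  nocache;

-- starts at 2, reaches MAXVALUE 3, then continues with MINVALUE 1
select cycle_demo_seq.nextval from dual connect by level <= 5;
-- returns 2, 3, 1, 2, 3

drop sequence cycle_demo_seq;
```

A primary key filled from such a sequence would raise a unique constraint violation on the second round, which is one more reason why CYCLE rarely fits ID columns.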

CACHE (default) vs. NOCACHE

Caching a sequence is a huge performance feature. The default setting is CACHE 20, which is good for most scenarios. It means that 20 sequence values are served from shared memory (SGA) instead of from disk, and the data dictionary is updated only once for those 20 values.

See the section about caching considerations for more information about this very important parameter.

Demo:

create sequence mySeq cache 1000;

select sequence_name, cache_size, last_number

from user_sequences

where sequence_name ='MYSEQ';

SEQUENCE_NAME CACHE_SIZE LAST_NUMBER

MYSEQ 1000 1

select myseq.nextval from dual connect by level <= 3;

NEXTVAL

1

2

3

select sequence_name, cache_size, last_number

from user_sequences

where sequence_name ='MYSEQ';

SEQUENCE_NAME CACHE_SIZE LAST_NUMBER

MYSEQ 1000 1001

After this, we still have 997 cached sequence values.

The default value of cache 20 is a kind of sweet spot for OLTP purposes. Consider increasing the cache only when you need to create a very large number of sequence values in a short time. This typically happens during data loads. Don’t forget to lower the cache value again after the data load is over.

ORDER vs. NOORDER (default)

It is a common misconception that we need to specify ORDER to get ordered values from a sequence object. The sequence object will always produce ordered values! Oracle did not implement some kind of random mechanism. Sequence.nextval will always give you the last value + the increment. Any kind of “randomness” comes from other things: we have no control over which session fetched the last value (multi user), when that value was inserted (seq.nextval call < insert time < commit time), and whether sequence caches were lost.

The ORDER setting is only relevant in a RAC (Real Application Clusters) environment. And even there it should always be NOORDER (the default). Read the chapter about the performance considerations for an explanation.

Remember

ORDER on RAC = slow

ORDER on non-RAC = no effect

easy.

KEEP vs. NOKEEP (default)

This is a switch that most database developers will never need. It might be more relevant for Java developers.

In 12.1 a new feature called Application Continuity was introduced. It allows the database to capture and replay a certain workload. It comes with the license options for RAC or Active Data Guard.

The problem is that a call to sequence.nextval would deliver a new value. This is not wanted for REPLAY purposes. Altering a sequence to KEEP provides the same sequence value during the replay.

From the appendix of Oracle’s white paper about Application Continuity:

Mutable Functions

Mutable functions are functions that can change their results each time that they are called. Mutable functions can cause replay to be rejected because the results visible to the client can change at replay.

Consider sequence.NEXTVAL that is often used in key values. If a primary key is built with a sequence value and this is later used in foreign keys or other binds, the same function result must be returned at replay.

Application Continuity provides mutable value replacement at replay for Oracle function calls if GRANT KEEP or ALTER.. KEEP has been configured.

If the call uses database functions that support retaining original mutable values, including sequence.NEXTVAL, SYSDATE, SYSTIMESTAMP, and SYS_GUID, then, the original values returned from the function execution can be saved and reapplied at replay. If an application decides not to grant mutable support and different results are returned to the client at replay, replay for these requests is rejected.

It is important to remember that the KEEP parameter during creation has nothing to do with keeping the sequence pinned in the SGA. An example of how to do that is in the “discussion about gapless IDs” section.

SCALE vs. NOSCALE (default)

SCALE is a very interesting new setting. It is meant to reduce contention on the index of the ID column when many sessions insert at the same time. More details about that in the performance section.

Using SCALE adds a six-digit prefix to the beginning of the sequence value. The prefix is built from the instance number and the session ID (SID).

SCALE has two options EXTEND and NOEXTEND (default). See how it works and differs.

create sequence myseq;

Sequence MYSEQ created.

NEXTVAL

--------------------------------------

1

alter sequence mySeq scale;

NEXTVAL

--------------------------------------

1017670000000000000000000002

alter sequence mySeq scale extend;

NEXTVAL

--------------------------------------

1017670000000000000000000000000003

For the sake of brevity I removed the “sequence altered.” results and the “select mySeq.nextval from dual;” calls.

My session ID in this demo was 767. The 101 is the instance ID (1) + 100. So in a RAC environment, this will ensure that the values provided by different nodes will not clash. On non-RAC systems this leading part should always be 101.

NOSCALE gives us a normal sequence value of 1.

SCALE NOEXTEND gives us a sequence value of 28 digits (MAXVALUE) with a 2 at the end (last value+increment by) and a 101767 at the beginning.

SCALE EXTEND gives us a sequence value of 28+6 digits with a 3 at the end (last value+increment by) and a 101767 at the beginning.

So EXTEND adds the additional digits on top of the MAXVALUE setting, whereas NOEXTEND adds it inside the range defined by MAXVALUE.

In most circumstances – if we consider scalable sequences – we should use SCALE NOEXTEND, just to be sure that the generated value still fits into the table column. For very large tables that already contain some extremely high values, we might need to use EXTEND, but I expect this situation to be very rare.

When is this useful? Only in cases where extreme performance matters. So for large or very large tables, with a lot of inserts from multiple sessions (parallel inserts).

SESSION vs. GLOBAL (default)

Sequence values do not depend on a user session. Every call to sequence.nextval will give the next incremented value regardless of which session executed this. This feature ensures that nobody gets a duplicate key.

User/Session A calls mySeq.nextval 3 times and gets 1,2,3.

User/Session B calls mySeq.nextval 3 times and gets 4,5,6.

If both sessions fetch the values almost simultaneously then A might get 1,3,4 and B might get 2,5,6. Notice that there might be gaps from the perspective of a single session, but the values are still ordered for each session.

With SESSION sequences this behaviour changes. Session A calls mySeq.nextval three times and gets 1,2,3. Session B calls mySeq.nextval three times and also gets 1,2,3. The values are not shared between sessions.

Where do we need this? Only for global temporary tables (GTT). The data in a GTT persists for the duration of a session (alternatively until commit) and then is gone. Same behaviour for the SESSION sequence – the generated sequence values only persist for the duration of the session.
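A minimal sketch of a SESSION sequence together with a global temporary table. The names gtt_import_lines and gtt_line_seq are made up for this example.

```sql
-- hypothetical staging table, the rows are private to each session
create global temporary table gtt_import_lines
( line_no   number,
  line_text varchar2(4000)
) on commit preserve rows;

-- every session gets its own independent number range
create sequence gtt_line_seq session;

insert into gtt_import_lines (line_no, line_text)
values (gtt_line_seq.nextval, 'first line');

insert into gtt_import_lines (line_no, line_text)
values (gtt_line_seq.nextval, 'second line');

-- each session sees only its own rows and its own numbering
select line_no, line_text
from gtt_import_lines
order by line_no;
```

Running the same script in a second session starts the numbering again from the beginning, independent of the first session.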

Practical considerations

For most cases the default settings are perfect.

Only if you encounter issues (performance or unusual number of gaps) or if your data has some special scenarios (batch ETL jobs, very large number of rows) then you should start thinking about tinkering with the default settings.

The following subchapters discuss common questions and show how to work with sequences to solve some typical tasks.

How to avoid reusing the same ID in different dbs

Sometimes we have a distributed database. Especially for global companies each region might have its own database. The data for the different regions still needs to be comparable. And sometimes the data will be consolidated or exchanged. In such cases it helps, if the ID values do not overlap.

One way to do that is to use the INCREMENT BY parameter. On database 1 we use a sequence object starting with 1 and an increment of 10. So this will give IDs like 1, 11, 21, 31, 41, ….

create sequence testseq increment by 10 start with 1;

On database 2 we use a sequence starting with 2 and an increment of 10. So this will give IDs like 2, 12, 22, 32, 42, …. With an increment of 10 this scheme works for up to 10 regions.

create sequence testseq increment by 10 start with 2;

Result is that those values do not overlap. There are other (and possibly better) ways to solve the situation, like sys_guid(). But this is a fairly easy and stable concept.

Caching considerations

If the sequence is used very infrequently, then you can set it to NOCACHE. For example if you have a staff table, I don’t expect that new personnel is hired every second. Typically it will be a few people per month (depending on the size of your company, of course). For such low frequency inserts performance doesn’t matter. You can set the sequence object to NOCACHE or to a very low cache value. However if you do a large data import, consider increasing the cache size before running that data load.
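A minimal sketch of adjusting the cache around a data load, using the emp_seq sequence from the first examples. The value 1000 is only an assumption for illustration, the right size depends on the volume of the load.

```sql
-- before the load: hand out 1000 values per dictionary update
alter sequence emp_seq cache 1000;

-- ... run the data load here ...

-- after the load: back to the default cache size
alter sequence emp_seq cache 20;

-- or, for a table with very infrequent inserts
-- alter sequence emp_seq nocache;
```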

Does setting a larger cache size need more SGA memory?

No.

Or to explain it in Tom Kyte’s words:

All we need to keep in the cache is:

the sequence on disk was N

the cache size is M

the current value is X

As long as X is less than N+M – we just increment X when someone calls NEXTVAL.

we do not need to keep in the cache “N, N+1, N+2, … N+M-1”, we just keep N, M and X and increment X when someone asks for a new sequence value. When X=M, we update SEQ$ and reset N in the cache.

So, cache 1000 and cache 20 take the same amount of space in the cache.

How to reset a sequence?

There are three general ways to set a sequence to a different value.

- Call sequence.NEXTVAL repeatedly until you reach the target value

- Manipulate the increment parameter using a negative increment. Call nextval once. Reset.

- Restart the sequence (new undocumented feature)

The first way usually is not practical. A notable exception might be if you manually added some data without using the sequence and you want to jump over those few values.

If you want way 1, then the CONNECT BY LEVEL clause helps to do it quickly.

select myseq.nextval from dual connect by level <= 996;

And here is a demo for way 2:

Preparation setup

drop sequence mySeq;

create sequence mySeq cache 1000;

set autotrace traceonly statistics

select myseq.nextval from dual connect by level <= 996;

set autotrace off

select myseq.nextval from dual;

NEXTVAL

----------

997

The “set autotrace traceonly” command works in sql*plus. I used it here to avoid printing 996 values onto the screen. It is not relevant for the demo itself.

The current value now is 997 but we want the next call to nextval to return 1.

Now reset the sequence.

alter sequence mySeq increment by -996 nocache;

select myseq.nextval from dual;

alter sequence mySeq increment by 1 cache 1000;

After this code, the very first session that calls myseq.nextval will see 2 as the value returned, because the nextval call during the reset already consumed the value 1.

If we really need to see 1, we must also lower the MINVALUE, because INCREMENT BY can not result in anything lower than the MINVALUE (ORA-08004: sequence MYSEQ.NEXTVAL goes below MINVALUE and cannot be instantiated).

alter sequence mySeq increment by -997 nocache minvalue 0;

select myseq.nextval from dual;

alter sequence mySeq increment by 1 cache 1000;

select myseq.nextval from dual;

Notice that we now incremented by -997 instead of -996 and that we are calling nextval twice. We can not reset the minvalue to 1 during the second ALTER SEQUENCE command, because that would also violate the rules (ORA-04007: MINVALUE cannot be made to exceed the current value). The easiest solution is to let it stay at 0.

Using NOCACHE is important to avoid issues with the stored last_number. Also check the increment by and the cache setting before you alter the sequence. If the increment by is different, then you need to change the above code and probably need to call nextval a second time.
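A minimal sketch of the check mentioned above. It reads the current settings of the sequence from the user_sequences view before anything is altered.

```sql
-- current settings of the sequence that is about to be reset
select sequence_name, min_value, max_value,
       increment_by, cache_size, last_number
from user_sequences
where sequence_name = 'MYSEQ';
```

With increment_by and cache_size noted down, the final ALTER SEQUENCE statement of the reset can restore exactly the previous settings.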

In 18c we got a third option to reset a sequence – the RESTART option.

ALTER SEQUENCE mySeq RESTART;

This is currently undocumented.

Thanks to Roger Troller for making me aware of it (Blog).

I tested it a little bit further and found out two more things.

- We can already use it in 12.2.0.1. Which makes sense, since 18c is really just 12.2.0.2.

- And we can combine it with the START WITH clause.

So the following works !

alter sequence testseq_20 restart;

select testseq_20.nextval val1 from dual;

VAL1

1

alter sequence testseq_20 restart start with 15;

Sequence TESTSEQ_20 altered.

select testseq_20.nextval val1 from dual;

VAL1

15

Very convenient. This should be the preferred way to reset a sequence whenever you need to do that.

Dropping and recreating the sequence is not recommended. While this will also allow us to set a new START WITH value, it has a major drawback. All references to the sequence are then broken. Especially all privileges are lost, like GRANT SELECT on #sequence to #schema.
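If a drop and recreate can not be avoided, it helps to note the existing grants first. A minimal sketch, assuming the sequence is called MYSEQ and lives in the current schema:

```sql
-- privileges that other schemas currently hold on the sequence
select grantee, privilege, grantable
from user_tab_privs
where table_name = 'MYSEQ'
and owner = user;
```

Each row found here has to be granted again after the sequence was recreated.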

Can we use an ID from a sequence to order by insert time?

Short answer: no. The order of inserts and the order of sequence values often match but are not guaranteed to match.

Detailed answer: Usually it works.

I very often use an ID column filled by a sequence as a second order criterion. For example I typically sort a logging table – where trace information is written – by the insert date and the LOG_ID (sequence based PK).

order by insert_date desc, log_id desc

The insert date (if it is a date) is only accurate to the second. Even if it is a timestamp there might have been multiple inserts in the same fraction of a second. The log_id is a perfect second order criterion.

We can safely assume that the inserts that were done from the same session have ordered sequence values. There might be gaps, but the sequence values will be produced in the same order as we did the inserts. There can be ID values in between that are from a different session. But for trace log information, it usually does not matter if a different session is ordered before or after our session. However the data from one session should be correctly ordered. And this is guaranteed.

Is cycling useful?

I never had the need for cycling sequences. I firmly believe that if you think you need those, you have a much deeper problem somewhere else. It would probably be better to solve that problem instead of using a cycling sequence.

Since 12c we have SESSION sequences. For some cases where CYCLE was considered in the past, a SESSION sequence might be the better choice.

Also ROWNUM and the analytic function ROW_NUMBER can be used to create consecutive values at time of select, instead of a sequence providing those values at time of insert.
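A minimal sketch of that idea, using the super_emp table from the first examples. The consecutive number is calculated at query time and is never stored, so it is always gapless for the rows that are selected.

```sql
-- consecutive position numbers, ordered by hire date and then by ID
select row_number() over (order by hire_date, id) as position_no,
       id,
       first_name,
       last_name
from super_emp
order by position_no;
```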

Discussion of gapless IDs

This is a problem/question I often encountered: How to make a sequence gapless?

TL;DR: You don’t need to. The effort and the restrictions needed to make an ID column gapless are too high in (almost) all use cases.

The sequence object can and does provide gapless numbers. In a multi user environment we just can not reliably use the provided values to store them in a gapless way. Even in a single user environment, the stored IDs could be deleted. So one consequence of the gaplessness requirement, would be to forbid delete operations.

The main point is that almost all the things that will create “holes” in an ID column are under our control. It is not the Oracle database that can not provide gapless sequences. It is the complexity of the business rules combined with performance requirements in a multi user environment, that make it almost impossible to have an ID column without potential gaps.

Performance + Multi User + Gapless IDs build a triangle of goals that exclude each other. We can not reach all three goals at the same time, one needs to suffer. However those goals do not react in the same way, when we sacrifice one a tiny bit. So let’s investigate what happens then:

We can not even reach good performance (let alone very high performance) if we need multi user capability and gaplessness at the same time. To enable this we need to serialize access to the whole table. This in turn means only one session can write into the table and all other sessions need to wait until that session finishes its whole transaction.

We can have very high performance and gaplessness if we only have a single user. But as soon as a second user wants to write at the same time, we need to introduce severe serialization of the whole transaction, just to ensure gaplessness. And this means performance drops immensely. Btw. this is how MS Access works. Only one user can write into the so-called database.

But we can get almost gapless IDs and still have very high performance for multiple users. Almost gapless means, we sometimes might have gaps in our sequence, but this situation is rare. This is the default behaviour of Oracle sequences.

How do we get gaps in our IDs?

a) a record in our table was deleted.

b) the insert ran into an error or was rolled back

(remember sequence.nextval is called a tiny moment before the insert is executed).

c) Somebody called sequence.nextval but didn’t use the value.

d) The sequence cache was lost. One way this happens is when the database decides that other objects need to be in SGA memory and the sequence wasn’t called for a longer time.

By pinning the sequence we can avoid situations where the sequence cache ages out of the shared pool. A better alternative is to size the shared pool appropriately, so that in general sequence caches will not age out of it.

execute sys.dbms_shared_pool.keep('OWNER.MYSEQUENCE','Q');

This still doesn’t guarantee gapless IDs, but for most use cases it would be good enough.

The Oracle docs about skipping cached numbers:

18.1 Database Admin Guide – Managing Sequences

The database might skip sequence numbers if you choose to cache a set of sequence numbers. For example, when an instance abnormally shuts down (for example, when an instance failure occurs or a SHUTDOWN ABORT statement is issued), sequence numbers that have been cached but not used are lost. Also, sequence numbers that have been used but not saved are lost as well. The database might also skip cached sequence numbers after an export and import. See Oracle Database Utilities for details.

A normal or immediate shutdown of the database will not lose sequence numbers. Instead the database will update the data dictionary (table sys.seq$) with the last used value. Unfortunately most DBAs prefer to shut down a database using abort, since they don’t care enough about user sessions.

Sequence Performance

Why is a sequence fast and how can we use it in the most performant way?

Oracle invented sequences with performance in mind. They provide a way to create surrogate keys in a multi user environment while minimizing serialization.

Basic working of an Oracle sequence

A sequence is just an entry in the dictionary table sys.seq$.

desc sys.seq$;

Name Null? Type

---------- -------- ------------

OBJ# NOT NULL NUMBER

INCREMENT$ NOT NULL NUMBER

MINVALUE NUMBER

MAXVALUE NUMBER

CYCLE# NOT NULL NUMBER

ORDER$ NOT NULL NUMBER

CACHE NOT NULL NUMBER

HIGHWATER NOT NULL NUMBER

AUDIT$ NOT NULL VARCHAR2(38)

FLAGS NUMBER

PARTCOUNT NUMBER

The highwater column is the same as the last_number column in the view user_sequences.

When a session fetches a new sequence value (using .nextval), the dictionary table needs to be read and the row needs to be updated with the new value. If multiple sessions do that, then one would have to wait for the other. This is called serialization. To avoid that issue, Oracle uses two clever mechanisms.

- The dictionary table is updated using an autonomous transaction. So the value is stored and other sessions can see it, even if the main transaction (from the user session) is not finished.

- The new highwater value that is stored, is not the next value, but it is the value + the cache. Any call to sequence.nextval will first read from the sequence cache and only once the numbers there are exhausted, it will read from the table and update it.

It is of course possible to write a similar mechanism ourselves with our own table. And I have seen projects where they did exactly that. But it is very hard to do properly and even then will not beat the performance of the original sequence. So you would need a very special business case to justify writing your own sequence mechanism.

Speed it up

If you aim for maximum performance there are some considerations to make.

- You must use a sequence cache. The cache size also plays an important role. For most OLTP tables the default setting of cache=20 is a very good choice. However when you do large data loads, then a much larger cache size is advisable. There is a diminishing returns effect. Doubling the cache does not double the performance.

- On a RAC you really should use NOORDER. The ORDER keyword is only relevant for Real Application Clusters. Using ORDER would try to synchronize the sequence caches over all cluster nodes. This is extremely bad for the performance. Using NOORDER gives each RAC node a separate sequence cache. This also means that an insert on node1 might have sequence value 1 and the next insert on node2 might have sequence value 1001. The third insert, on node1 again, would use value 2.

- Sequences should be used as late as possible. There is usually no need to fetch a sequence value first and then do the insert later. Use the sequence while doing the insert. Either by adding it to the insert statement, or via a database trigger, or since 12c as an identity column or a default on NULL column setting. Using the 12c mechanics allows us to avoid the database trigger. This results in much better performance, as I have shown in a previous blog post.

- Consider scalable sequences for large tables if you are on 18c already. The effect can not be seen immediately, but scalable sequences should give a better and more stable performance in the long run.

For small and medium sized tables I expect scalable sequences to be slower than non-scalable sequences (because a bigger number needs to be stored). I didn’t test the effect, but a normal (small) sequence value only needs 2-6 bytes, whereas a scaled sequence value always needs 15 (NOEXTEND) or 18 (EXTEND) bytes. These bytes are used by the table column, the unique index that supports the primary key (PK), all foreign key (FK) columns pointing to the PK and the indexes supporting those FKs.

If you need the value of the sequence later in your code again you can either use .currval (not recommended) or use the returning clause to give you the generated ID.

best practice: returning clause

Several SQL and PL/SQL DML commands have a returning clause. It allows us to get back data that is created or manipulated while the DML (insert or update) is running.

The most common usage is to return the ID value that is filled by a database trigger (or an identity column), so that this ID can be used further in the same session or transaction or be returned to the client. For example to insert any child records or to show the freshly generated record in a GUI report.

insert into super_emp (first_name, last_name, hire_date)

values ('Bruce', 'Banor', trunc(sysdate))

returning id into :ID;

print :ID;

ID

--------------------------------------------------------------------------------

9

The returning clause is more typical in PL/SQL. Here is an example using a record of %rowtype. We can even return the generated ID value directly into the record.

declare

r_super_emp super_emp%rowtype;

begin

r_super_emp.first_name := 'Hal';

r_super_emp.last_name := 'Jordan';

r_super_emp.hire_date := trunc(sysdate);

insert into super_emp

values r_super_emp

returning id into r_super_emp.id;

sys.dbms_output.put_line('New ID = '||r_super_emp.id);

end;

/

New ID = 10
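The child-record use case mentioned above could look roughly like the following sketch. The child table super_emp_skill and its columns emp_id and skill_name are hypothetical and not part of the original example. It assumes that emp_id is a foreign key pointing to super_emp.id.

```sql
declare
  l_emp_id super_emp.id%type;
begin
  -- insert the parent row and capture the generated ID
  insert into super_emp (first_name, last_name, hire_date)
  values ('Barry', 'Allen', trunc(sysdate))
  returning id into l_emp_id;

  -- super_emp_skill is a hypothetical child table (FK emp_id -> super_emp.id)
  insert into super_emp_skill (emp_id, skill_name)
  values (l_emp_id, 'Speed');

  commit;
end;
/
```

Because the ID comes straight out of the insert, no extra query and no call to .currval is needed between the two statements.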

Identity columns

Identity columns and DEFAULT ON NULL are great enhancements in database version 12.1.

They allow us to use a sequence as late as possible (while inserting), but without the need for a before row insert table trigger. This improves insert performance dramatically. A trigger is PL/SQL based and runs during the execution of a SQL DML statement (insert). Because of that, a context switch from the SQL engine to the PL/SQL engine (and back) is needed. If we can avoid the trigger completely, we also avoid the context switch, and this improves performance considerably.

I ran some tests, and under very favourable circumstances (nothing else inserted but the ID) the insert performance was 900% faster using IDENTITY or DEFAULT columns instead of a trigger.

With DEFAULT ON NULL we still create the sequence ourselves, which also means we know its name. With IDENTITY the sequence is automatically created and maintained by Oracle.

The name of the generated sequence will always begin with “ISEQ$$_”.

demo

create table test

( id number generated by default on null as identity (start with 20) primary key

);

select table_name, column_name, identity_column, default_on_null, data_default

from user_tab_columns

where table_name = 'TEST';

| TABLE_NAME | COLUMN_NAME | IDENTITY_COLUMN | DEFAULT_ON_NULL | DATA_DEFAULT |
| --- | --- | --- | --- | --- |
| TEST | ID | YES | YES | "SCHEMANAME"."ISEQ$$_10707661".nextval |
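For comparison, here is a sketch of the DEFAULT ON NULL variant, where we create and name the sequence ourselves. The names test2 and test2_seq are assumptions for illustration. The last query uses the dictionary view user_tab_identity_cols to look up the system generated sequence behind the identity column of the demo table.

```sql
-- Hypothetical names: test2_seq, test2.
create sequence test2_seq;

create table test2
( id number default on null test2_seq.nextval primary key
);

-- a NULL (or omitted) id is replaced by the next sequence value
insert into test2 (id) values (null);

-- find the generated sequence of the identity column in table TEST
select table_name, column_name, generation_type, sequence_name
from   user_tab_identity_cols
where  table_name = 'TEST';
```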

Drawbacks

It can be problematic to use identity columns over a database link, especially if the ID value is needed. The main issue is that the returning clause does not work over a DB link, and there are no good alternatives for identity columns. This works slightly better with DEFAULT ON NULL. We know the sequence object, so we can first fetch the ID value over the DB link and then use it for the insert. Not performant at all, but it works.

We also cannot directly alter an existing ID column into an IDENTITY column. It is possible, however, to modify an existing identity column (for example switching between GENERATED ALWAYS and GENERATED BY DEFAULT ON NULL).

Another minor inconvenience is that the system generated sequence will still be there when the table is dropped, at least as long as the table is still in the recycle bin.

There were also some other very special bugs using identity columns. All have workarounds, but my experience is that DEFAULT ON NULL is slightly less error prone.
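The workaround for a known sequence could be sketched like this. The database link remote_db, the remote sequence remote_emp_seq and the remote table remote_emp are hypothetical names used only for illustration.

```sql
declare
  l_id number;
begin
  -- step 1: fetch the ID from the remote sequence (one round trip)
  select remote_emp_seq.nextval@remote_db
  into   l_id
  from   dual;

  -- step 2: insert with the known ID (second round trip)
  insert into remote_emp@remote_db (id, last_name)
  values (l_id, 'Prince');

  commit;

  sys.dbms_output.put_line('New remote ID = ' || l_id);
end;
/
```

The ID is available locally in l_id, which replaces the returning clause at the cost of an additional round trip.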
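Switching the generation type of an existing identity column might look like this sketch, using the table test from the demo above.

```sql
-- from GENERATED BY DEFAULT ON NULL to GENERATED ALWAYS
alter table test modify (id generated always as identity);

-- and back again
alter table test modify (id generated by default on null as identity);
```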
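A quick way to observe this behaviour, sketched with the demo table test and assuming the recycle bin is enabled:

```sql
drop table test;

-- the system generated sequence should still be listed here
select sequence_name
from   user_sequences
where  sequence_name like 'ISEQ$$%';

-- removing the table from the recycle bin removes the sequence as well
purge table test;
```

Using drop table test purge; in the first place skips the recycle bin completely.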

Index contention and Scalable Sequences

Scalable sequences were secretly introduced in 12.1.0.1 but only documented in 18.1.

Richard Foote did a three part series about scalable sequences that covers all you need to know.

The basic problem has to do with index contention.

To give a very brief explanation: when we have an ID column that is filled using a sequence, the index will become unbalanced over time. Because new values are only added to the right side of the index, leaf block splits happen there frequently. Sometimes this will be a 50-50 block split, and the free space left in those blocks usually will not be filled up later. This eventually leads to a heavily right (un)balanced index tree.

Such a block split is a very resource intensive operation, and other sessions need to wait for it. If you see a high number of “enq: TX - index contention” wait events (check MOS 873243.1), the reason could be those index block splits.

One workaround for the index contention problem in the past was to use a REVERSE KEY index. But this created other performance problems, for example the CBO will not do any range scans on that index.

Scalable sequences are a slightly better solution to avoid those index contention issues (hot index blocks). Because they put a prefix derived from the instance and session ID in front of the number, values provided by a scalable sequence are distributed more evenly over the index. At least as long as multiple sessions do the inserts.
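A rough check for those waits since instance startup could look like the sketch below. It assumes you have select privileges on v$system_event. The numbers need to be judged relative to the overall workload.

```sql
-- cumulated waits since instance startup
select event, total_waits, time_waited
from   v$system_event
where  event like 'enq: TX - index contention%';
```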
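A minimal sketch of how scalable sequences are created (18c and later). The sequence names are assumptions for illustration. The fetched values depend on the instance and session, so no output is shown here.

```sql
-- Hypothetical names. NOEXTEND is the default for SCALE.
create sequence demo_scale_seq scale;
create sequence demo_scale_ext_seq scale extend;

-- the prefix in front of the number differs per instance and session
select demo_scale_seq.nextval     as scaled_value
     , demo_scale_ext_seq.nextval as scaled_extended_value
from   dual;

-- the data dictionary shows which sequences are scalable
select sequence_name, scale_flag, extend_flag
from   user_sequences
where  sequence_name like 'DEMO_SCALE%';
```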

Export and Import

consistency issues

When you export a database or a schema, it is crucial to do a time consistent export using

exp ... consistent=Y ...

Why? Otherwise the sequence object, including its current value as START WITH, is exported first, and the tables with their data are exported later. This means that in between some session could call sequence.nextval and use up a value. You won't notice the issue during import. But as soon as an insert happens in the imported schema, you will get a dup_val_on_index error, because the table already contains an ID value that the sequence generator has just provided.
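After an import, a simple sanity check is to compare the highest ID in the table with the next value the sequence would hand out. This is only a sketch: the sequence name SUPER_EMP_SEQ is an assumption, and for cached sequences last_number can be higher than the value the next call actually returns.

```sql
-- highest ID already stored in the table
select max(id) as max_id
from   super_emp;

-- SUPER_EMP_SEQ is a hypothetical sequence name
select sequence_name, last_number, cache_size
from   user_sequences
where  sequence_name = 'SUPER_EMP_SEQ';
```

If max_id is equal to or higher than last_number, the next inserts will run into the dup_val_on_index error described above.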

sys warning

Consistent=Y does not work as SYS. So never export data as SYS! The reason is that SYS cannot do read only transactions. Using SYSTEM is fine.

datapump

For datapump the equivalent to consistent=Y is the flashback parameter.

expdp ... flashback_time=systimestamp ...

There is also a flashback_scn parameter. Both do a time consistent export.
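To use flashback_scn you first need an SCN. One way to get the current one is sketched below. It assumes select privileges on v$database or execute privileges on dbms_flashback.

```sql
-- current system change number of the database
select current_scn
from   v$database;

-- alternative via the dbms_flashback package
select dbms_flashback.get_system_change_number as current_scn
from   dual;

-- the value is then passed to the export, for example:
-- expdp ... flashback_scn=<scn from above> ...
```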

And since 11.2 there is a legacy mode for datapump, which lets you use consistent=Y (it is rewritten into the flashback_time parameter).

Other ways to generate ordered numbers

Sometimes a sequence is not the best way to generate ordered numbers. For example, when we want to number the entries of a detail table per parent key, each detail record should start with 1 for each parent entry. A sequence is not the proper tool to get such values.

Alternatives are ROWNUM, the analytic function ROW_NUMBER() and certain ways to create lists in SQL, for example by using hierarchical queries with CONNECT BY.
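Two of these alternatives, sketched with a hypothetical detail table order_items (columns order_id and item_name are assumptions for illustration):

```sql
-- number the detail rows per parent, starting with 1 for each order
select order_id
     , item_name
     , row_number() over (partition by order_id
                          order by item_name) as position_no
from   order_items;

-- generate the list 1..10 without any table or sequence
select level as n
from   dual
connect by level <= 10;
```

Both queries calculate the numbers at query time. Nothing is stored, so the numbers are gap free and follow the current data.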

Further reads