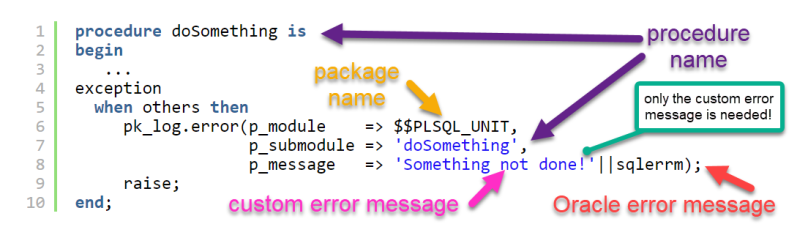

Does your error logging logic still look like this?

example 1 – old school handler

procedure doSomething is

begin

...

exception

when others then

pk_log.error(p_module => $$PLSQL_UNIT,

p_submodule => 'doSomething',

p_message => 'Something not done!'||sqlerrm);

raise;

end;

Here is what I prefer!

example 2 – compact handler

procedure doSomething is

begin

...

exception

when others then

pk_log.error('Something not done!');

raise;

end;

Such code is way more convinient! Of cause this will store the same information as the previous example 1.

To get there some parts have to be moved into the logging method (pk_log.error).

sqlerrm

is the error message

When moving, it should also be improved (at least usedbms_utility.format_error_stack)$$PLSQL_UNIT

is the name of the package (or of the standalone procedure or function)- ‘doSomething’

is the hardcoded name of the procedure

It needs to be gathered dynamically at runtime inside the logging package.

The biggest issue is 3.) – to find the name of the module at runtime. The function who_called can solve that. It also gathers the package name on the way, so 2.) is also covered.

Side note: Is hardcoding the procedure name a problem?

Not really. However if the name changes one needs to change it in several lines. And in case one forgets to change the error handler, the compiler will not issue an error. Then the problem might go unnoticed for some time and could be misleading in case of exceptions.

I have two versions of the who_called function. The first standalone version is not recommend to use. Instead use the packaged version. However the first version demonstrates the implemented logic in a clear way.

Both functions will find the full name of the module that called this code block. The default behaviour is that modules from the same package are ignored. This allows easy usage inside packages ment for logging, auditing or testing. Everything that is instrumentation code can usually profit from this little helper.

Usecase 1 – demo purposes – standalone function

This logic assumes that we use the function to find out who called the procedure that is currently running. So not the name of the procedure itself, but the name of the caller.

Installation code

-- Cleanup

-- drop function who_called;

-- Usecase 1: standalone function - simple demo version

-- check the call stack to find the calling module.

create or replace function who_called return varchar2

is

v_caller utl_call_stack.unit_qualified_name;

v_name varchar2(128);

begin

-- print the call stack just for demo purposes

for i in 1..utl_call_stack.dynamic_depth loop

sys.dbms_output.put_line(

rpad('+',utl_call_stack.dynamic_depth-i,'+')||

utl_call_stack.concatenate_subprogram( utl_call_stack.subprogram(i) )

);

end loop;

-- step backwards though the call stack

-- 1 = current module = who_called function

-- 2 = the module that calls who_called

-- 3 = the caller!

v_caller := utl_call_stack.subprogram(3);

v_name := utl_call_stack.concatenate_subprogram(v_caller);

return v_name;

end who_called;

/

example 3 usage

set serveroutput on

-- Testcase 1.1 anonymous block

declare

procedure DoSomething as

begin

dbms_output.put_line('I want to doSomething!');

dbms_output.put_line('Who called? '|| who_called);

end;

procedure DoMore as

begin

doSomething;

dbms_output.put_line('I want to doMore!');

dbms_output.put_line('Who called? '|| who_called);

end;

begin

doMore;

end;

/

output

I want to doSomething! +++WHO_CALLED ++__anonymous_block.DOSOMETHING +__anonymous_block.DOMORE __anonymous_block Who called? __anonymous_block.DOMORE I want to doMore! ++WHO_CALLED +__anonymous_block.DOMORE __anonymous_block Who called? __anonymous_block PL/SQL-Prozedur erfolgreich abgeschlossen.

Each time the function is called, it prints the full call stack to the screen. This is just for demonstration purposes.

The first time the function who_called is executed is from inside the submodule doSomething.

The call stack at this point looks like this

| Position in Stack | Module |

| 1 | who_called |

| 2 | doSomething |

| 3 | doMore |

| 4 | __anonymous_block |

Line 5 in the anonymous block is the line with the who_called function (call stack 1). It is part of the doSomething procedure (call stack 2). On position 3 of the call stack we always find the caller – here the procedure doMore that did execute doSomething.

The second time the function is called is in the doMore procedure. And the call stack looks like this:

| Position in Stack | Module |

| 1 | who_called |

| 2 | doMore |

| 3 | __anonymous_block |

In this case the caller is the anonymous block.

The example clearly shows that we can fetch the name of any module in the call stack (including anonymous blocks). We just have to fetch the name from the proper level in the call stack.

Usecase 2 – error and trace logging – packaged version

This works a bit differently when used inside a larger package that is used to instrument the code base. Typically we can add error logging logic, and logging debug (trace) calls. In that scenario, we want to find out the name of the module where the trace or error logging call is made.

The logic assumes that we have a package used for this instrumentation purpose only. Which also means, that all calls inside this package can be ignored. So essentially we want to find out what is the full name of the procedure (or function) where the log error or the log trace call is done. The full name can be something like package.module.submodule.subsubmodule.

Installation code

When testing this, make sure you do not have/own a package pk_log already. This would overwrite it.

If you like it copy the code for the who_called function into your own instrumentation package.

-- Cleanup

-- drop function who_called;

-- who_called packaged version

-- check the call stack to find the calling module.

-- calls from this package are not considered

create or replace package pk_log as

/* this is a very simplified example for error logging

only demonstration purposes of the WHO_CALLED function

your error logging logic must be better!

*/

procedure error(message in varchar2);

procedure trace(message in varchar2);

end pk_log;

/

create or replace package body pk_log as

function who_called return varchar2

is

-- author: Sven Weller, 2021, syntegris information soultions GmbH

v_pkg varchar2(128) := $$plsql_unit; -- name of the package that holds the who_called function

v_caller utl_call_stack.UNIT_QUALIFIED_NAME;

v_name varchar2(128);

begin

-- step backwards though the call stack

-- 1 = current module = who_called function

-- 2 = the module that calls who_called

-- 3 = the caller!

-- we could start later (level 3 or even 4), if we knew exactly how many other submodules are always in the call stack

-- This might be risky if function inlineing is possible, but that can be prevented by a pragma. Example in proc error

for i in 2..utl_call_stack.dynamic_depth loop

v_caller := utl_call_stack.subprogram(i);

v_name := utl_call_stack.concatenate_subprogram(v_caller);

-- at lexical depth 1 we find the name of the package

if v_caller(1) != v_pkg then

-- In some scenarios, we might want to go even one step upward the call stack. Typically not for error logging purposes.

-- In such cases we could simply fetch one more name from the call stack

-- v_caller := utl_call_stack.subprogram(i+1);

-- build the full name, but only if we found the caller

v_name := utl_call_stack.concatenate_subprogram(v_caller);

-- no need to go further through the call stack

exit;

end if;

end loop;

return v_name;

end who_called;

procedure writeMessage(message in varchar2, message_type in varchar2) as

begin

case message_type

when 'E' then

sys.dbms_output.put_line('ERROR at ' || who_called);

when 'T' then

sys.dbms_output.put_line('Info from ' || who_called);

else

sys.dbms_output.put_line('ERROR! Unknown Message Typ ' || message_type || ' in '||who_called);

end case;

sys.dbms_output.put_line(message);

if message_type = 'E' then

-- Add full error stack

sys.dbms_output.put_line(dbms_utility.format_error_stack);

-- Add line where the error happened

-- Only the last line from the backtrace is relevant, all other lines are already in the error stack

-- compare output to sys.dbms_output.put_line(dbms_utility.format_error_backtrace);

sys.dbms_output.put_line(' in '||nvl(UTL_CALL_STACK.backtrace_unit(utl_call_stack.backtrace_depth),'anonymous block')

||' at line '||sys.utl_call_stack.backtrace_line(utl_call_stack.backtrace_depth)

);

end if;

end writeMessage;

procedure error(message in varchar2) as

pragma autonomous_transaction;

begin

pragma inline(writeMessage,'NO'); -- we don't want to inline in case of optimization_level = 3.

writeMessage(message,'E' );

end error;

procedure trace(message in varchar2) as

pragma autonomous_transaction;

begin

writeMessage(message,'T' );

end trace;

end pk_log;

/

example 4 call package, log trace and log error

set serveroutput on

-- Testcase 2.1 anonymous block

declare

procedure DoSomething as

begin

pk_log.trace('I want to doSomething!');

end;

procedure DoMore as

begin

doSomething;

raise no_data_found;

end;

begin

doMore;

exception

when others then

pk_log.error('I wanted to do more, but this happend!');

raise;

end;

/

output

This is the dbms_output (the simplified trace and error logs).

Info from __anonymous_block.DOSOMETHING I want to doSomething! ERROR at __anonymous_block I wanted to do more, but this happend! ORA-01403: no data found ORA-06512: at line 10 in anonymous block at line 13

The output is followed by the exception from the final RAISE; of the block (from SQL developer).

Fehler beim Start in Zeile: 4 in Befehl -

declare

procedure DoSomething as

begin

pk_log.trace('I want to doSomething!');

end;

procedure DoMore as

begin

doSomething;

raise no_data_found;

end;

begin

doMore;

exception

when others then

pk_log.error('I wanted to do more, but this happend!');

raise;

end;

Fehlerbericht -

ORA-01403: no data found

ORA-06512: at line 17

ORA-06512: at line 10

ORA-06512: at line 13

01403. 00000 - "no data found"

*Cause: No data was found from the objects.

*Action: There was no data from the objects which may be due to end of fetch.

In SQL Plus we get one more line, because of the additional raise in line 17.

There is something special in the code. The last line from the error backtrace is captured and returned. "in anonymous block at line 13" .

This last line is missing in the error stack, which means it is usually not returned when you only store dbms_utility.format_error_stack.

To add this last line utl_call_stack.backtrace_line(utl_call_stack.backtrace_depth) is executed. All other lines from the error backtrace are already in the error stack (depends on DB version) – so those can be ignored.

Conclusion

For my purposes it works fine and the resulting code is very clean.

I currently use it in an audit package based upon Connor McDonalds Audit Generator which was massively reworked for some custom needs. Maybe I publish this one day. But that is another story.

who_called advantages

- Way less to type – less mistakes

- much easier to see what the error handler does

- concentrate coding effort on the important bits – mostly the additional information that should be added to the error

who_called disadvantages

- A tiniest bit slower than hard coding the package and submodule name

=> For exception handling this is not relevant.

=> In huge debug or trace scenarios this time could add up if extreme performance is needed. Conditional compiling can be applied to remove instrumentation calls if you compile for extreme plsql performance. - since part of the logic is hidden other developers might wanted to add the module name into the message

- module name is fetched at run time, not at compile time

=> this can be an issue, especially if optimization level 3 is used. Function inlineing (check the compiler warnings) is a method that the plsql optimizer can choose to speed up logic. However it also means that the function at runtime will not exist anymore. And utl_call_stack will not be able to report the name of the module correctly. Note that there is a compiler warning that inlining happend (which usually is a good thing).

Feel free to adapt this code snippet to your special needs. I’m convinced this will help you to write simpler and more efficient error handlers.